How does it work?

Process

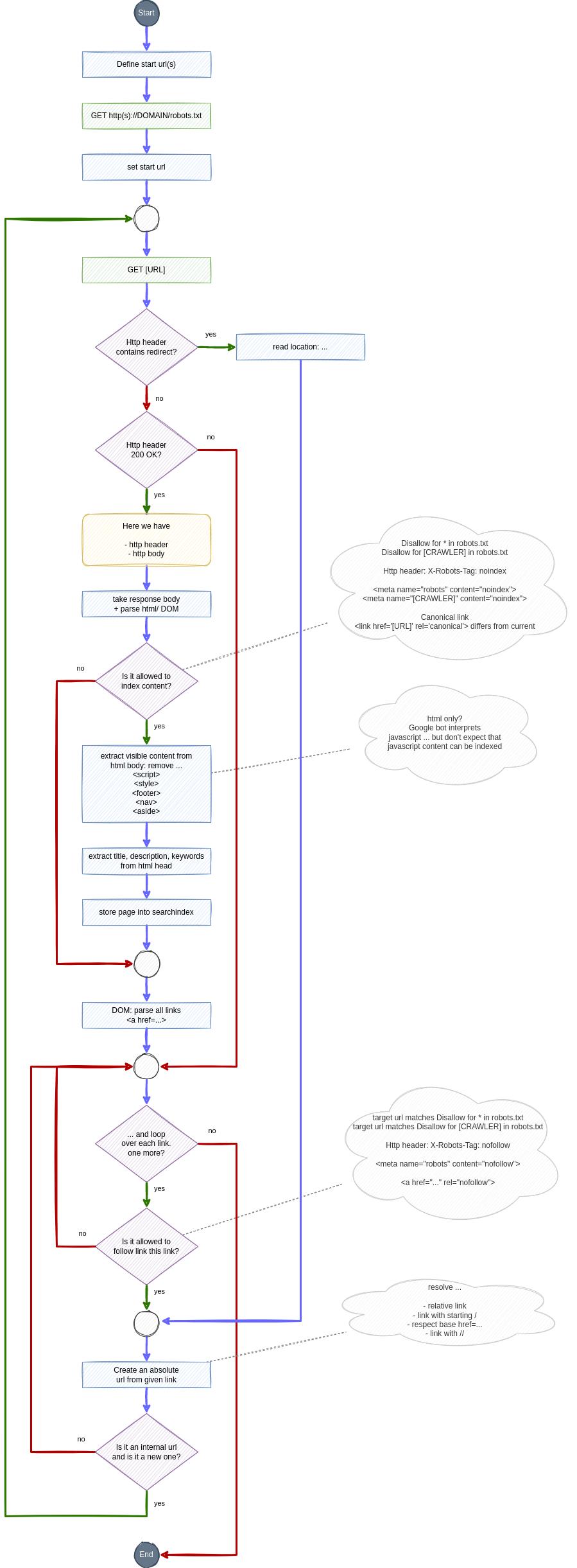

I created this drawing to show what happens when ahCrawler spiders a website: it fetches the site's content and stores it for that website.
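The spidering step can be illustrated with a minimal sketch. This is not ahCrawler's own code. It is a generic breadth-first crawl, and the names `LinkParser`, `crawl`, `fetch`, `max_pages` and the in-memory `SITE` are assumptions made up for this example. To keep it runnable without network access, the `fetch` callback reads pages from a dictionary instead of sending HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects the href values of <a> tags and the text of a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        if data.strip():
            self.text_parts.append(data.strip())


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl of a single host; returns {url: page text}.

    fetch(url) is a hypothetical callback that returns the HTML of a
    page as a string, or None if the page cannot be retrieved.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    index = {}
    while queue and len(index) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # unreachable page: nothing to store
        parser = LinkParser()
        parser.feed(html)
        index[url] = " ".join(parser.text_parts)  # store the content
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            target, _fragment = urldefrag(urljoin(url, href))
            # Follow only unseen links on the same host.
            if urlparse(target).netloc == host and target not in seen:
                seen.add(target)
                queue.append(target)
    return index


# Hypothetical in-memory website standing in for real HTTP requests.
SITE = {
    "https://example.com/": (
        '<h1>Home</h1><a href="/about">About</a>'
        '<a href="https://other.example/">Ext</a>'
    ),
    "https://example.com/about": (
        '<p>About us</p><a href="/">Home</a><a href="/missing">Broken</a>'
    ),
}

index = crawl("https://example.com/", SITE.get)
```

Here `index` ends up with one entry per page that could be fetched on the start host. The external link and the page that cannot be fetched are skipped. A real crawler would additionally need HTTP handling, politeness rules and persistent storage, which this sketch leaves out.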

Alongside this content crawl there is a second crawl that collects resources for the link analysis and more.